Learning within a discipline often creates the illusion of mastery. For a long time, I operated within the boundaries of computer engineering, building up my skills and understanding. But in USP, when I started engaging with people outside my discipline, I encountered questions I couldn’t answer, perspectives I hadn’t considered, and assumptions I didn’t even realise I had. Different disciplines ask different questions, value different insights, and uncover different truths.

Spending an entire year learning and working with AI, I thought I understood it inside and out. It took the peer review during the writing of this reflection to remind me that I still don’t know everything even about the things I thought I knew well, and that there is much more to the world I don’t and may never fully understand. This post captures the moment I recognised that true understanding begins where my knowledge ends, and grows by remaining open to what the world continues to teach me.

AI And I

As a computer engineer, I learnt to develop smart solutions that make things easier for users, through automation or assistance. During my NOC internship, I applied these skills at an AI startup, working as the sole AI developer. I had my hand in every aspect of the technology. And as with most startups, my responsibilities extended well beyond development — into business strategy, user experience, and even product design.

Over time, I developed a comprehensive understanding of how AI solutions are built and deployed. I regularly consulted the CEO about our product roadmap. Outside of work, I attended conferences, connected with industry experts, and began incorporating AI tools into my daily routine. I lived and breathed AI for a year.

At the heart of most conversations I encountered were three key questions:

- How can we use AI to improve processes?

- Which AI technologies best serve our use cases?

- What are the best practices when building AI solutions?

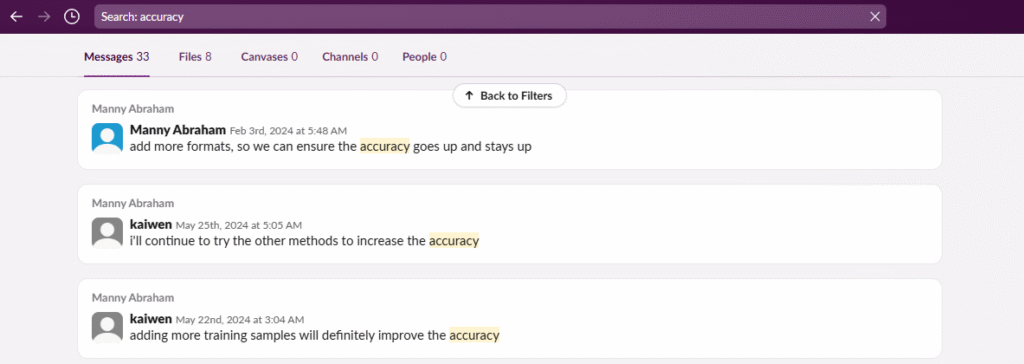

Within the startup, product performance and user value were at the centre of everything. They determined the success of our product, and consequently, the survival of the startup. In our team, ‘accuracy’ was the benchmark for performance, and as the AI developer, I made improving it my top priority. Here are some messages I exchanged with my boss discussing ‘accuracy’:

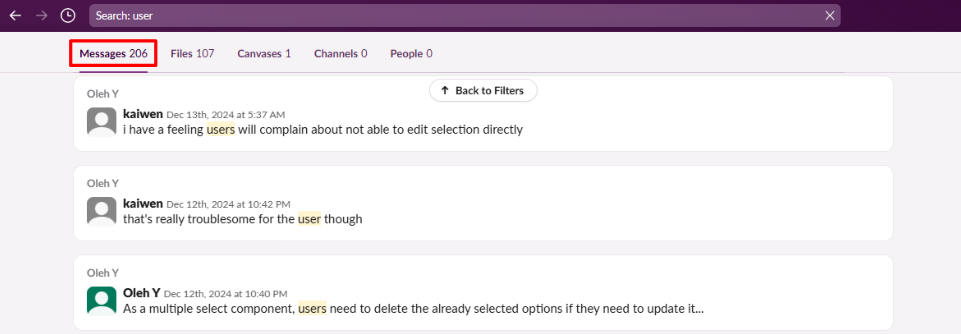

As we built and refined the product, I often debated certain design choices with colleagues, weighing functionality and trade-offs with the user in mind.

Over the course of the year, we mentioned ‘user’ over 200 times. User experience was critical to the product’s success, and we thought about it at every step.

By the end of the year, I had built real-world AI solutions, kept up with the latest industry trends, and understood how AI fits into business contexts. I could confidently answer most questions people asked about AI — its tools, principles, and applications. I thought I knew everything about it.

Blind Spot

The process of writing this reflection revealed that I didn’t fully understand AI, even after a year of working closely with it.

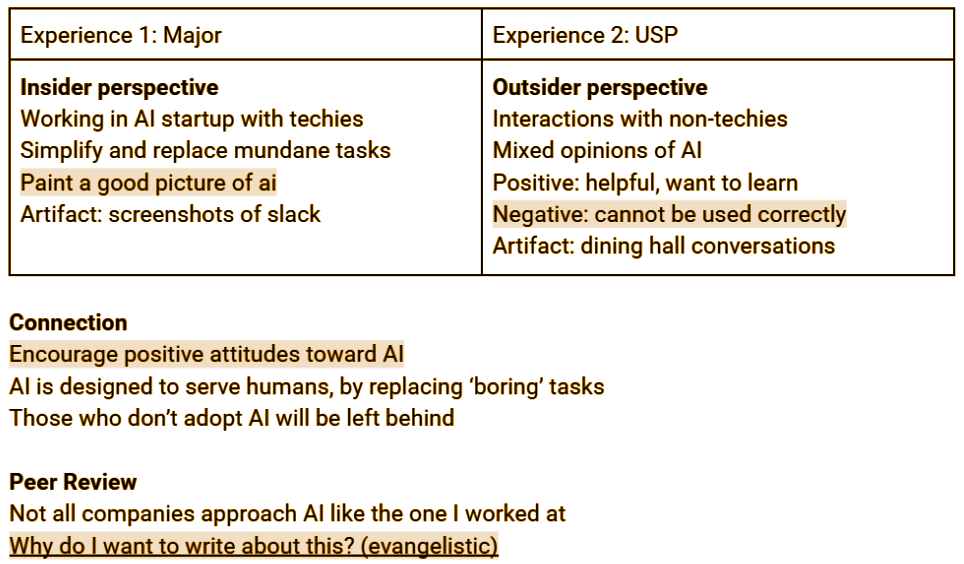

My original idea for this reflection was quite different from what you’re reading now. I initially planned to contrast my experience working as an AI developer, what I called the insider perspective, with how my peers from other disciplines viewed AI – the outsider perspective. I had noticed their skepticism: concerns about model bias, data privacy, and ethical implications. I intended to address these concerns using what I considered my ‘expert knowledge’ and conclude that we should adopt a more optimistic attitude toward AI.

That was the outline I brought to the peer review session, where my classmates from a range of disciplines exchanged feedback. I presented my outline and took down their comments, as captured in the screenshot below:

Those comments revealed more than I expected. One peer reviewer, An Lin, majoring in history, asked why I was so intent on promoting a positive view of AI. She pointed out that my tone sounded ‘evangelistic’, as though I was trying to persuade people to embrace AI. I had no response. It was a question I had never thought to ask myself, and it lingered with me long after the session ended.

Eventually, I came to a realisation: I had become so invested in AI that I had stopped questioning it. I had begun to dismiss non-technical concerns, assuming that my expertise made me more informed. But their concerns were just as valid as my enthusiasm. By learning what I learnt, and then thinking that was all there was to it, I became more ignorant. Blind spots had formed in my view of AI.

This realisation unsettled me. It wasn’t just about AI; it was about the way I approached knowledge itself. I had spent years refining my technical skills, but in doing so, I had overlooked other perspectives that were just as important. My blind spot was not just about AI’s impact; it was how narrowly I had defined expertise. I needed to rethink how I learn, and more importantly, who I learn from. True expertise is not just about knowing more; it’s about staying open to what others see that I might miss. And that begins with humility.

I Don’t Know

How did this happen? Four years ago, I joined USP to learn more about the world, to go beyond the boundaries of computer engineering. But along the way, I sometimes lost track of that direction. Learning and excelling at computer engineering, I grew complacent at times. And complacency, I’ve come to realise, is the enemy of growth. I now understand that learning something solely as a discipline is deeply limiting. Surrounded by like-minded peers, seasoned educators and a structured curriculum, I saw only one dimension of knowledge.

USP’s interdisciplinary education changed that. It showed me that technical performance and functionality are just two ways of seeing AI. If I viewed AI through a social science lens, I would see completely different things. Through conversations with peers and professors from diverse fields, and by taking courses outside my discipline, I discovered unexpected connections. Things that once seemed unrelated were, in fact, deeply entangled.

This realisation led me to investigate further the things about AI I hadn’t considered before. After the peer review, in another course on democracy and inequality, I wrote my mid-term paper examining the relationship between AI and inequality. Through deliberate thought and research, I learnt how AI automation affects labour markets and exacerbates inequality. AI doesn’t just solve problems; it can create new ones, often for the most vulnerable. Performance and functionality were just two pieces of a much larger puzzle, one that includes ethics, social justice, and human impact.

I don’t have a perfect answer for the problems posed by AI, and I doubt I ever will. But acknowledging those problems is essential. As a computer engineer, I must remain humble and listen to others, especially those outside my field. Even when they seem to ‘know less’ about the technology, they may understand something I’ve missed. I must get comfortable with admitting what I don’t know, and have the humility to seek insight from those who do. Because the real danger lies not in ignorance, but in acting beyond my limits; that’s when harm is done.

Here’s the bottom line: everything in the world is connected, and to fully understand one thing is to understand all that is entangled with it. This reflection has not only deepened my understanding of AI; it has transformed my approach to learning itself. I may never fully grasp everything about AI or computer engineering, but that’s alright, as long as I remember that I don’t know everything. As a saying often attributed to Socrates goes, “The only true wisdom is in knowing you know nothing.”